What Makes Amplitude Encoding So Attractive For Certain Algorithms

The hidden cost that decides whether amplitude encoding works for you

Do you believe that amplitude encoding is about saving qubits or compressing data?

Think again. That assumption is wrong, and it leads to poor algorithm choices.

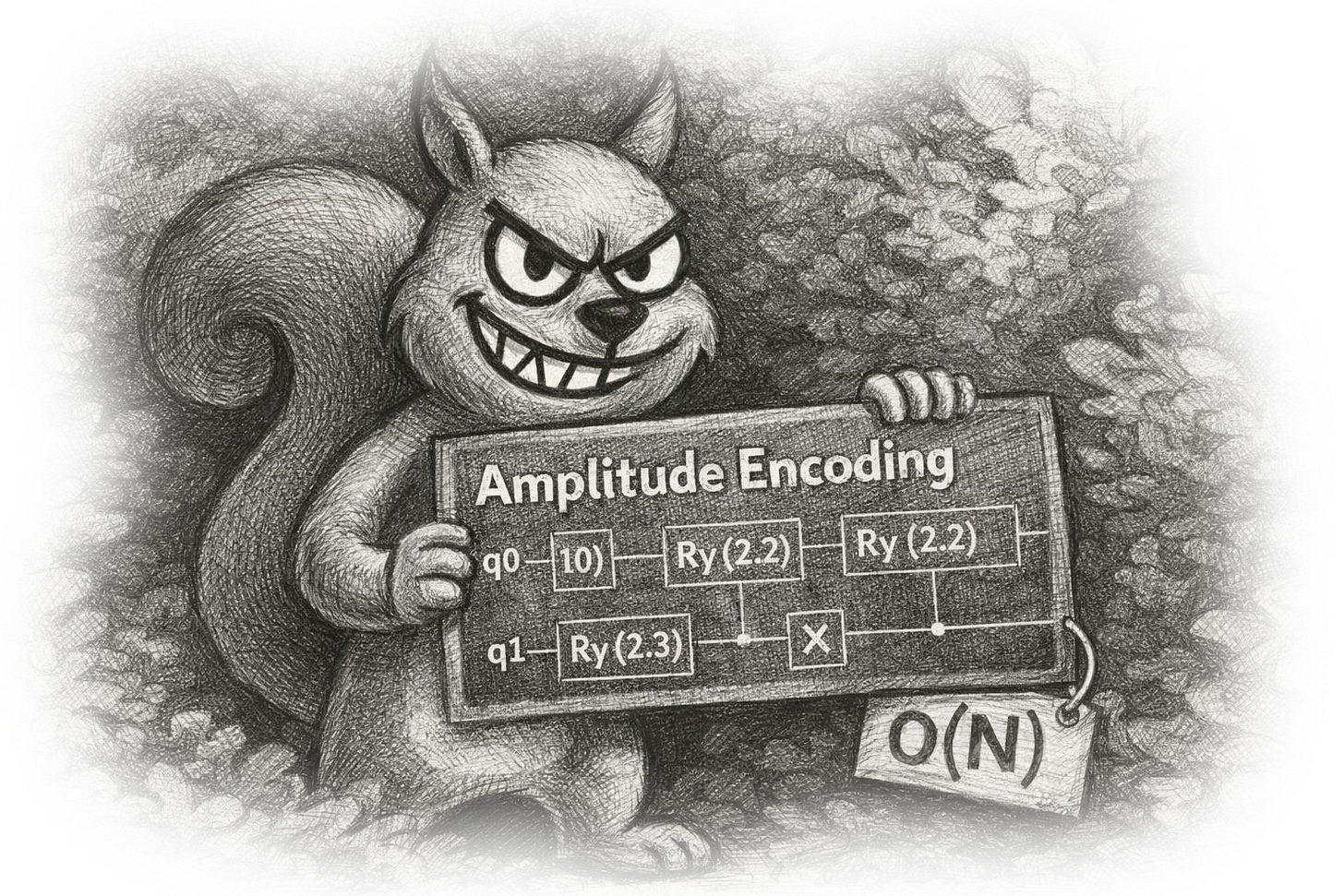

Amplitude encoding does not make loading data cheaper. An N-dimensional vector fits into the amplitudes of only about log₂N qubits, but preparing a general state of that kind still typically costs O(N) operations, the same as a classical scan. At that point, no quantum speedup has happened yet. The only reason to pay this price is if the rest of the algorithm asks questions that classical code cannot answer efficiently.
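To make the O(N) loading cost concrete, here is a minimal NumPy sketch of one standard state-preparation scheme: a Grover-Rudolph-style binary tree of RY rotations. It is not the author's method or any SDK's API; `amplitude_encode`, `ry_tree_angles`, and `prepare_from_angles` are hypothetical helpers, the sketch assumes nonnegative real entries (signs and complex phases would need extra rotations), and it only computes the angles and replays them classically instead of building a circuit. The point it shows: a padded vector of length N needs N - 1 data-dependent angles, so the preparation has to touch every entry.

```python
import numpy as np

def amplitude_encode(x):
    """Zero-pad x to length 2**n and scale it to unit L2 norm.
    Returns the amplitude vector and the number of qubits n it needs."""
    x = np.asarray(x, dtype=float)
    n_qubits = max(1, int(np.ceil(np.log2(len(x)))))
    padded = np.zeros(2 ** n_qubits)
    padded[: len(x)] = x
    norm = np.linalg.norm(padded)
    if norm == 0:
        raise ValueError("the zero vector cannot be amplitude-encoded")
    return padded / norm, n_qubits

def ry_tree_angles(amps):
    """Angles for a binary tree of RY rotations (Grover-Rudolph style).
    Sketch assumption: amplitudes are nonnegative reals.
    Returns levels top-down; level k holds 2**k angles, N - 1 in total."""
    probs = np.asarray(amps, dtype=float) ** 2
    levels = []
    while len(probs) > 1:
        left, right = probs[0::2], probs[1::2]
        parent = left + right
        # Fraction of the parent's probability that goes to the "0" child;
        # empty branches get ratio 1, i.e. angle 0.
        ratio = np.divide(left, parent, out=np.ones_like(parent), where=parent > 0)
        levels.append(2 * np.arccos(np.sqrt(np.clip(ratio, 0.0, 1.0))))
        probs = parent
    return levels[::-1]  # built bottom-up, returned top-down

def prepare_from_angles(levels):
    """Classically replay the tree: each RY(theta) splits a branch amplitude a
    into a*cos(theta/2) (next bit 0) and a*sin(theta/2) (next bit 1)."""
    state = np.array([1.0])
    for angles in levels:
        nxt = np.empty(2 * len(state))
        nxt[0::2] = state * np.cos(angles / 2)
        nxt[1::2] = state * np.sin(angles / 2)
        state = nxt
    return state

amps, n = amplitude_encode([3, 1, 2, 0, 1, 1])
levels = ry_tree_angles(amps)
print(n, sum(len(a) for a in levels))  # prints: 3 7
print(np.allclose(prepare_from_angles(levels), amps))  # prints: True
```

Only 3 qubits hold the 8 padded amplitudes, yet all 7 angles depend on the data. On hardware each level becomes a uniformly controlled rotation whose gate count grows with the number of angles, so the qubit savings do not translate into cheaper loading.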

What actually matters in practice:

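You give up efficient access to individual entries. Asking “What is x_i?” means measuring, which collapses the state and destroys the encoded data, so even a rough estimate of one entry needs many freshly prepared copies.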

You only get global answers: norms, overlaps, projections, probabilities. You don’t get complete output vectors.

The results are probabilistic by nature. Demanding exact values for every coordinate requires so many repeated runs that any advantage disappears.

This is where many problems break down: if you need partial verification, debugging, or reconstruction of the vector, amplitude encoding is the wrong tool.
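The trade-offs above can be illustrated with a small classical simulation in NumPy. This is a sketch under stated assumptions, not a quantum SDK: `measure_counts`, `estimate_entry_magnitude`, and `swap_test_p0` are hypothetical helper names, measurement is modeled as multinomial sampling with probabilities |x_i|², and the swap-test probability P(0) = (1 + |⟨a|b⟩|²)/2 is computed exactly rather than sampled. It shows that sampling recovers only the magnitude of one entry, with statistical error shrinking roughly like 1/√shots, while a single global number such as an overlap is what a measurement naturally yields.

```python
import numpy as np

def measure_counts(state, shots, rng):
    """Simulate `shots` computational-basis measurements of a normalized state.
    Outcome i occurs with probability |state[i]|**2; on hardware every shot
    consumes one freshly prepared copy, because measurement collapses it."""
    probs = np.abs(state) ** 2
    return rng.multinomial(shots, probs / probs.sum())

def estimate_entry_magnitude(state, i, shots, rng):
    """Estimate |x_i| from outcome frequencies. The sign (phase) of x_i
    never shows up in computational-basis counts."""
    counts = measure_counts(state, shots, rng)
    return np.sqrt(counts[i] / shots)

def swap_test_p0(a, b):
    """Exact ancilla probability P(0) = (1 + |<a|b>|**2) / 2 for a swap test:
    one global number that summarizes the overlap of two whole vectors."""
    return 0.5 * (1.0 + abs(np.vdot(a, b)) ** 2)

rng = np.random.default_rng(seed=0)
x = np.array([0.5, -0.5, 0.5, 0.5])  # unit norm, fits in 2 qubits
y = np.array([0.5, 0.5, 0.5, -0.5])  # orthogonal to x

for shots in (100, 10_000, 1_000_000):
    # Estimates of |x[1]| = 0.5 tighten slowly; the minus sign is lost.
    print(shots, estimate_entry_magnitude(x, 1, shots, rng))

print(swap_test_p0(x, x))  # prints: 1.0 (identical states)
print(swap_test_p0(x, y))  # prints: 0.5 (orthogonal states)
```

Halving the error of a single-entry estimate costs about four times as many shots, and each shot needs a fresh state preparation, which is why reading out coordinates one by one erases the advantage.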

But when do you actually want to use it?
